These sections provide explanations and details on important terms used by the Yobe library.

Audio buffers are arrays that contain audio sample data. They are a fixed size and are passed into the Yobe processing functions which outputs processed versions of the buffers. The audio buffer data must be interleaved as well and must be either 16-bit PCM or double encoded. The data is interleaved by pairing the samples from mic 1 followed by mic 2 in order. The buffer sizes can be 128ms, 256ms, 512ms, or 1024ms long at 16kHz, however this size is built into the library and can be verified using com.yobe.speech.Util.GetBufferSizeTime.

Automatic Speech Recognition is a process through which audio can be transcribed into text to perform a specific function. The Yobe library output works well with various ASR engines by providing them with noise free audio data.

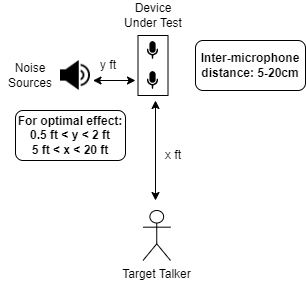

This is the audio capture scenario where the target voice is at a relatively farther distance from the device under test, as compared to the noise to be suppressed.

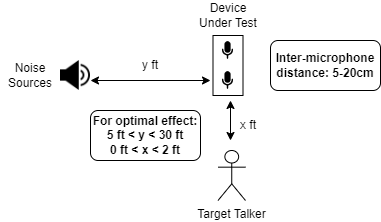

This is the audio capture scenario where the target voice is at a relatively closer distance from the device under test, as compared to the noise to be suppressed.

The voice template is an audio template related to a specific user that can be used as a reference to be able to identify said user.